Linda Gaasø

Hue

Arne Skorstad

Tone Kraakenes

Roxar

Oil and gas companies always strive to make the best possible reservoir management decisions, a task that requires them to understand and quantify uncertainties and how those uncertainties affect their decisions. When companies try to assess risk, however, two bottlenecks stand in the way of meeting their business needs: large uncertainty in the geological data, and therefore in the description of the reservoir, and time-consuming workflows.

New technologies and approaches enable faster, more accurate, and more intuitive modeling to help today's geophysicists make better decisions.

Earth modeling software has come a long way since it first appeared in the 1980s. Reservoir modeling has become common practice for making economic decisions and assessing risk at several stages of a reservoir's life, with detailed models used to investigate reserve estimates and their uncertainty.

Despite this progress, conventional reservoir modeling workflows are still optimized around paradigms developed in the 1980s and 1990s. The workflows are typically segmented and "siloed" within organizations, relying on a single model that becomes the basis for all reservoir management decisions. These models are not equipped to quantify the uncertainty associated with each piece of geological data (from migration, time picking, time-to-depth conversion, etc.). The disjointed processes may take many months, from initial model concept to flow simulation, for several reasons, one of which is the outmoded technology used to drive them. Faced with increasingly remote and geologically complex reservoirs, conventional workflows can fall short.

Two main types of data are used in reservoir modeling: seismic data and drilling data (well logs). Geophysicists typically handle the data and its interpretation, and geomodelers then try to turn those interpretations into plausible reservoir models. This time-consuming, resource-intensive approach requires strict quality control and multiple iterations. Data is often ignored, or overly ambitious interpretations are made from poor seismic data, which reduces the chance of obtaining a realistic model.

Interpreters still frequently have to rely on manual work and hand editing of fault and horizon networks, which usually fixes the reservoir geometry to a single interpretation in history-matching workflows. This makes updating structural models a major bottleneck, because methods and tools are lacking both for repeatable, automatic modeling workflows and for efficient handling of uncertainties.

Because of this manual work and these time-consuming workflows, some oil fields go as long as three years between modeling efforts, even though drilling advances at an average rate of a few meters per day and keeps producing new data. With traditional modeling, it takes several days or even weeks to construct a complete reservoir model, and at least several hours to partially update it. Earth models today comprise dozens of surfaces and hundreds of faults, which means users cannot, and should not, update an earth model completely by hand. Instead, they should rely on software that supports (semi-)automated procedures, freeing them from routine modeling tasks.

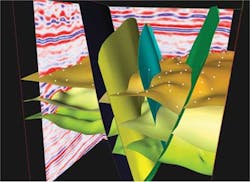

Instead of a traditional serial workflow for interpretation and geomodeling, Roxar pioneered "model-driven interpretation." This approach allows geoscientists to guide and update a geologically consistent structural 3D model directly from the data, and to focus their efforts where the model needs more detail. In some areas, neighboring data points on a horizon tell the same story about its depth; they are all representative. In other areas, for example close to faults, some data points are more important than others, namely those indicating where the faults create a discontinuity in horizon depth. Geoscientists should focus on these critical data points rather than on data that does not alter the model. In the extreme case, a correlation of 1.0 between two variables implies that keeping one of them is sufficient, because the other value is then known, and it does not matter which one the geoscientists choose to keep. In practice, however, correlations between data are generally below 1.0, which leads most geoscientists to ask: at what correlation, or redundancy level, should we simplify our data model by omitting the extra data, and thereby save time and money when updating it? Modeling efforts in the oil and gas industry should be purpose-driven. This is the key idea behind model-driven interpretation.
