New tools aid in managing resources and optimizing productivity

Bill Bartling

SGI

The operational conflict between a rapidly declining workforce and the unprecedented investment costs of an exponentially expanding database demands a break from tradition and a search for the next technology revolution.

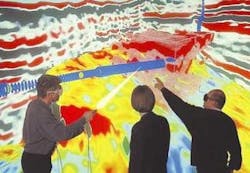

Data fusion and visualization allow data to be presented to the decision teams at Norsk Hydro. Source: Norsk Hydro


The energy industry is no stranger to technology revolutions. Past breakthroughs include unified petrotechnical databases, integrated applications, 3D analytical methods, large-volume visualization, and advanced drilling and completions. Most of today's technical workforce cut its teeth in the industry at a time when "deepwater" meant more than 300 ft of water and 3D seismic surveys were reserved for "special" areas where oil shrewdly cowered in the shadows of state-of-the-art 2D imagery. Today, 3D is routine, 4D is taking hold, and 10,000 ft of water is easily conquered.

Next revolution

The next revolution will be in the technical office work process, dramatically increasing the analysis, knowledge, and action bandwidth of the technical staff and increasing its "velocity of insight."

The gating factor for increasing financial performance is the rate at which information can be transformed into knowledge and action. Four factors define the information technology platform to achieve this:

- Modern, robust, high-speed, secure, and easily managed data storage and delivery

- High-productivity computing to transform data into analysis-friendly forms

- Data fusion and visualization to present the data to decision teams

- Real-time, anywhere, anytime collaboration, bringing the right skills to the problem to optimize decisions.

This revolution will deliver the virtual oil company and, built upon it, unprecedented operational efficiencies, better use of human resources, and, by extension, improved financial performance. But its utility does not stop there. It is also the catalyst for implementing the digital oil field: transforming real-time data from downhole and uphole sensors into fully populated digital oilfield models that describe the financial enterprise, the oilfield asset. Technology adapted from analogous applications, such as the command-and-control decision analysis centers widely used in military operations, will accelerate its adoption in the oilfield.

Modern, robust, high-speed, secure, and easily managed data storage and delivery enable visualization for reservoir simulation and exploration at the BP Center for Visualization, University of Colorado at Boulder.


Will this gift be wrapped in shiny paper and dressed with a red bow? As futuristic as it sounds, it is not as far off as it may seem. Many of the base technologies are on the market today, and research and development is under way on the next-generation systems that will fuel it. The industry's financial pressures will dictate how quickly it invests in the tools not yet on the market.

Key technologies

What can we have now, and what do we have to wait for? Let's tear the wrappers off a few of these packages and test-drive some of the key technologies.

Visual area networking: This concept is an architecture of connectivity, an enterprise-wide integration of data access, processing, networking, analysis, and visualization that drives a new work process designed to improve bottom-line business results dramatically.

Recently released commercial collaboration tools link asset teams across long distances over standard networks, letting them share analysis sessions on ultra-large data models. Several installations have demonstrated the robustness of this technology, which supports 802.11x wireless connections and is platform-independent. Near-term developments aim to improve performance, especially over long-distance, low-bandwidth corporate networks. Connecting teams on a gigabit LAN is highly effective when all the necessary team members are on that network. "But my reservoir engineer is in Baku, and my project is in London," you might well protest. Stay tuned. This is on the horizon.
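Why a gigabit LAN works while long-distance corporate links remain the hard case comes down to arithmetic. The sketch below estimates the bit rate of an uncompressed stream of rendered frames and the compression a link would need to carry it. The display settings (1280 x 1024 pixels, 24-bit color, 30 frames per second) and the 2 Mbit/s WAN figure are assumptions chosen for illustration, and the function names are hypothetical.

```python
def uncompressed_stream_mbps(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Bit rate of an uncompressed stream of rendered frames, in megabits per second."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1e6


def required_compression(stream_mbps: float, link_mbps: float) -> float:
    """Compression ratio needed to fit the stream on a link (1.0 means it already fits)."""
    return max(1.0, stream_mbps / link_mbps)


# Assumed display settings: 1280 x 1024 pixels, 24-bit color, 30 frames per second.
stream = uncompressed_stream_mbps(1280, 1024, 3, 30)
print(f"uncompressed stream: {stream:.1f} Mbit/s")

# Hypothetical links: a gigabit LAN and a 2 Mbit/s long-distance corporate WAN.
for name, link_mbps in [("gigabit LAN", 1000.0), ("2 Mbit/s WAN", 2.0)]:
    print(f"{name}: compression needed {required_compression(stream, link_mbps):.1f}:1")
```

Under these assumptions the stream runs at roughly 944 Mbit/s, which only just fits a gigabit LAN on paper, while the WAN link would need on the order of 470:1 compression.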

Fiber optic sensing: Many of the biggest hurdles to deploying fiber optic sensors have been cleared, including effective coatings that protect glass sensors in hot, caustic environments and systems that retrieve and redeploy sensors without pulling the well. Research into new capabilities, deployment methods, and protections that will extend sensor life and broaden the range of measurements is aggressively under way. The recent acquisition of several fiber optic start-ups by mainstream, industry-leading service providers confirms that this technology has gained prominence in the industry.

Broadband networks: Enormous communication bandwidth will soon be available at commodity prices. Today, 75% of installed fiber-optic cabling is dark, and only 35% of the lit fiber's capacity is regularly used. Financial pressures on the telecom industry will produce the same outcome they have produced in the IT and energy industries: consolidation and dramatically increased efficiency, which will drive prices down and access up while offering innovative new ways to give a spectrum of users alternative access.
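The two utilization figures compound. A minimal worked calculation, assuming every installed fiber carries the same capacity so that the percentages can simply be multiplied:

```python
# Figures quoted in the text; treating every installed fiber as equal capacity is an assumption.
dark_share = 0.75      # share of installed fiber that is dark
lit_used_share = 0.35  # share of lit capacity that is regularly used

lit_share = 1.0 - dark_share
regularly_used = lit_share * lit_used_share
print(f"regularly used: {regularly_used:.2%} of installed capacity")
print(f"idle or unlit:  {1.0 - regularly_used:.2%} of installed capacity")
```

On that assumption, less than 9% of installed capacity sees regular use, which is the headroom behind the expected fall in prices.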

Affordable, lit fiber is only part of the equation. Major recent breakthroughs are on the market today: optical switching, and specialized appliances that transmit ultra-large graphical models, rendered on supercomputers in the main office, over unprecedented distances with latency close to the speed-of-light limit. These products are revolutionizing connectivity for geographically distributed workforces and play an integral role in building visual area networks.
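"Near speed-of-light latency" still has a floor, because light travels more slowly in glass than in a vacuum. The sketch below computes that floor for a fiber route. The refractive index of 1.468 is a typical single-mode value and the 4,000 km route (roughly the London-Baku great-circle distance) is an assumption; real routes are longer, and switching, queuing, and rendering add further delay.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
FIBER_REFRACTIVE_INDEX = 1.468  # typical for single-mode fiber; an assumption here


def fiber_delay_ms(route_km: float, refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """One-way propagation delay over a fiber route; ignores switching, queuing, and rendering."""
    speed_in_fiber = SPEED_OF_LIGHT_M_PER_S / refractive_index
    return route_km * 1000.0 / speed_in_fiber * 1000.0


# Assumed 4,000 km route, roughly the London-Baku great-circle distance; real routes run longer.
one_way_ms = fiber_delay_ms(4000.0)
print(f"one way: {one_way_ms:.1f} ms, round trip: {2 * one_way_ms:.1f} ms")
```

A round trip of around 40 ms is small enough for interactive sessions, which is why bandwidth rather than distance tends to be the harder constraint.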

Data storage and delivery: The growth in data volume and complexity that underpins this new business model is nonlinearly related to the improvement in results. Storage hardware vendors observe that the disk capacity sold in the past 12 months alone equals the total capacity sold in all previous years combined. The world may be awash in data, yet for oil companies to prosper in the next generation, their data volumes will need to dwarf what they struggle to manage today.
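The vendors' observation is what steady annual doubling looks like: if sales double each year, then 1 + 2 + ... + 2^(n-1) = 2^n - 1, so each year's sales slightly exceed everything sold before. The sketch below checks this; the doubling rate is an assumption chosen to match the observation, not a vendor figure, and the units are arbitrary.

```python
# Hypothetical model: disk capacity sold doubles every year (arbitrary units).
years = 10
annual_sales = [2 ** year for year in range(years)]

for year in range(1, years):
    this_year = annual_sales[year]
    all_prior_years = sum(annual_sales[:year])
    print(f"year {year}: sold {this_year}, all prior years combined {all_prior_years}")
```

Under this model, the installed base roughly doubles every year, which is the growth rate storage systems would have to keep pace with.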

Will traditional, transaction-based data storage and delivery technologies spell success? Will systems that struggle with megabytes manage the information fundamental to changing the world? Will systems designed for kilobit transactions scale to gigabits, terabits, petabits, or even exabits? Just as the 486 PC that exceeded all our expectations in 1992 cannot even hold the operating systems required for today's tasks, tomorrow's data challenges require advanced data storage and delivery systems.

Yes, there is a shiny, wrapped package for this system too. Once again, we look outside the energy industry for others to plow the road and deliver mature, robust solutions that we need only install and adapt. The intelligence community demands highly secure data systems capable of handling file systems in the exabytes and individual files in the terabytes. One system in operation today stores, manages, and delivers the petabytes of new data collected daily by satellite surveillance.

The newest revolution is this system's ability to extend the file system geographically across the enterprise. Using newly developed high-bandwidth links between data storage installations, a single file system can span the enterprise, giving all users, on any operating system, a common view of the one copy of the data and its interpretation, with delivery limited essentially by the speed of light.

High-productivity computing: (exponential increase in data) + (dramatically shortened cycle time) + (real-time data fusion) = (scalable, high-productivity computing).

As we place more demands on technical computing systems to increase the "velocity of insight," each component must remain optimized, lest we merely move the bottleneck. High-productivity computing has historically been at the core of improved performance. Today, with growing data volumes, shrinking skilled staff, and rising investor expectations, companies will be differentiated by the speed at which they act on their optimized investment portfolios. Scaled and gridded computational systems provide flexible, proven supercomputing power that is easy to use and administer.
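The warning about moving the bottleneck can be made concrete: a chain of stages runs no faster than its slowest stage. A minimal sketch, assuming the workflow behaves as a simple serial pipeline; the stage names are borrowed from the four platform factors, and the throughput figures and function name are purely hypothetical.

```python
def pipeline_throughput(stage_rates: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and the rate it imposes on the whole workflow."""
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]


# Hypothetical sustained rates (GB per hour) for the four platform components.
rates = {
    "storage_and_delivery": 400.0,
    "computing": 150.0,
    "visualization": 250.0,
    "collaboration": 300.0,
}
print(pipeline_throughput(rates))  # ('computing', 150.0)

# Quadruple computing alone: the workflow speeds up, but only until the next-slowest stage.
rates["computing"] = 600.0
print(pipeline_throughput(rates))  # ('visualization', 250.0)
```

In this example, quadrupling compute raises end-to-end throughput by only two-thirds (150 to 250 GB/hour) because visualization becomes the new limit, which is why every component has to be scaled together.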

Large data visualization: What's in a picture? Fact, interpolation, imagination, creation, fears, dreams, the past, and the future. These aren't just pretty pictures anymore; they are the sum of the knowledge, data, and actions that the asset team has collected, analyzed, and implemented. The visualization system holds the "evolving technical knowledge base." New collaborative systems extend its reach to distant experts, whose input is critical to optimizing financial performance.

Many components are integrated into the visual area networking concept, including (clockwise from bottom right) central HPC and data storage, transfer of graphical data between the central server and the oil field, real-time data staging, and decision-making with the resulting graphics both at company headquarters and at regional offices.


The human mind can absorb this sheer volume of data only through robust digital visualization systems, which take advantage of millions of years of evolution that adapted humans to thrive in a visual, 4D world. Subsurface models built from hundreds of gigabytes of data render spatially accurate, highly complex images that individuals and teams alike can access in near real time, with large-format displays offering the most comfortable viewing.

Real-time data fusion: Real-time decisions require real-time data incorporated into complex decision systems. As valuable a lesson as the past might be, it is neither a description of the present nor a predictor of tomorrow. Real-time data, fused and visualized with static data and historical trends, is a vehicle for early intervention into sub-optimal operations.

Operational models are built from the best data and assumptions available. But producing a field changes the data on which the original model was built, and the assumptions must mature along with the field. Real-time data keeps the model current and, as a result, keeps the decision system current.
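One way to picture keeping the model current is a recursive estimator that blends each new gauge reading with the model's running estimate. The sketch below uses a scalar Kalman filter, a standard technique swapped in for illustration rather than a method the systems described here are stated to use. The tracked quantity (reservoir pressure), the starting value, the readings, and all variances are hypothetical; the predict step inflates uncertainty between readings to reflect that production keeps changing the field.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # model estimate of one quantity, e.g. reservoir pressure in psi (hypothetical)
    variance: float  # uncertainty of that estimate


def predict(est: Estimate, process_variance: float) -> Estimate:
    """Production changes the reservoir, so uncertainty grows between readings."""
    return Estimate(est.value, est.variance + process_variance)


def update(est: Estimate, reading: float, reading_variance: float) -> Estimate:
    """Blend one new gauge reading into the estimate (scalar Kalman update)."""
    gain = est.variance / (est.variance + reading_variance)
    return Estimate(est.value + gain * (reading - est.value), (1.0 - gain) * est.variance)


# Hypothetical numbers: the original model says 3000 psi; live gauge readings drift lower.
est = Estimate(value=3000.0, variance=400.0)
for reading in [2950.0, 2940.0, 2930.0, 2925.0]:
    est = update(predict(est, process_variance=25.0), reading, reading_variance=100.0)
    print(f"reading {reading:.0f} -> estimate {est.value:.1f} (variance {est.variance:.1f})")
```

Each reading pulls the estimate toward the live data while its variance settles well below the starting value; a real reservoir model would track many coupled quantities rather than a single number.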

Companies adopting these systems as the basis of real-time reservoir management expect to pay for them with cost savings from improved operations and, on top of that, to increase recovery factors by as much as several hundred percent. In addition, barrels recovered through this process later in a field's life come with much higher margins (due to reduced debt, depreciation, and amortization), padding the bottom line in good years and protecting margins from downward price pressure in bad years.

Several systems have entered the market in recent years to deliver real-time drilling and gauging information. Yet despite being available for several years, none has reached the mainstream by delivering reservoir behavior data in real time and fusing that data with live reservoir models as it is collected. Pilot projects with these new systems are under way at a few companies, paving the way to widespread use.

Nanotechnologies and sensor nets: Nanotechnologies are evolving quickly in R&D labs and will drive the wireless, distributed, remote data collection of the future. Self-contained devices no larger than a 1-mm cube will collect, process, and transmit measurements of a variety of environmental parameters, such as induction, pressure, density, sound, and chemical composition. No, that's not Rod Serling at the signpost up ahead. A number of laboratories are very close to delivering these devices.

Conclusion

Tomorrow's business challenges require innovation and modernization of infrastructure. Revolutionary systems that will serve as the platform for tomorrow's prosperity are commercially available today. Progressive companies will champion the first installations and set the standard for the industry, using these systems to drive costs down, expand opportunities, and, as a result, drive profits up.

Author

Geologist Bill Bartling, director of Global Energy Solutions for SGI, is responsible for SGI's strategy and position in the oil and gas industry.