Extended HPC can optimize E&P

Mark M. Ades - Microsoft; Jim Thom - JOA Oil and Gas

High-performance computing (HPC) is becoming more affordable and easier to use. Combined with today’s volume interpretation, modeling, and simulation software, cluster-based HPC is entering the oil and gas mainstream. Exploration and production companies are using HPC for exploration and to tackle complex, production-oriented algorithmic challenges.

Cluster-based computing and advanced reservoir modeling are used to drive production and to save costs in mature and offshore fields.

The nature of computing in the oil and gas sector is changing. Advances in computing and modeling systems, together with the need to find reserves under more challenging geological conditions and to produce older, more difficult fields affordably, are driving E&P companies to leverage HPC and sophisticated modeling and simulation systems. The goal is to tackle geomechanics and uncertainty management and to manage complex problems in mature fields more effectively.

HPC is not new to the industry. Petroleum companies have used mainframe supercomputers since the 1960s. But given the time and cost of traditional “backroom” mainframe runs, those early generation supercomputing systems typically were used only for the most promising projects.

Smart High performance computing ... matrix-free nonlinear CG solver

Those limitations began to diminish with the emergence of cluster-based computing technologies that harness the networked power of multiple smaller and more affordable commodity hardware pieces. Networked clusters, combined with the continued refinement of modeling and simulation software, allow scientists, engineers, and risk managers to better manage and evaluate the amounts of data needed to conduct many upstream activities.

By giving end users easy, low-cost access to HPC – allowing them to run terabytes of information in desk-side or server environments – cluster-oriented computing is now used successfully in a number of exploration-related applications. In seismic processing, for example, clustered computers have been used for some time to tackle prestack time and depth migrations, reverse time migrations, Radon demultiples, and other analytic challenges.

In a 2008 survey of the oil and gas industry conducted for Microsoft, researchers found that more than 40% of compute-intensive scientific applications take from a full day to a week to stabilize results, and that most applications require four or more iterations. Almost half of the respondents said they do not have enough processing power on their desktops to complete complex applications. The survey also showed that 89% of engineers and geoscientists believe better access to HPC can ease these challenges and increase, or at least accelerate, oil and gas production.

In response, HPC is emerging as a mainstream technical tool in the oil and gas business. Fueled by new and more economical server-based cluster computing technologies, and by modeling/simulation software developed specifically for exploration and production, supercomputing can reach the desktop.

As a result, end users are using HPC across the oil and gas spectrum. Companies are finding new ways to use supercomputing in exploration, while applying cluster-based computing to production challenges in mature and offshore fields. At the same time, new and innovative volume interpretation, modeling, and simulation technologies are being developed to take advantage of this compute power.

Two of the most prominent uses of HPC in the production setting illustrate the significance of this shift.

Production arena applications

The rise in the price of oil has made mature fields increasingly attractive targets. Driven to optimize the return on the development of mature fields while controlling operating costs, producers increasingly apply the capabilities of cluster-based HPC to production applications.

Two key applications – geomechanical simulation and dynamic uncertainty management – are especially relevant to production in mature fields.

Geomechanics

Ongoing advances in parallel, cluster-based high-performance computing capabilities have given E&P professionals potent new geomechanical tools.

In the past decade, geomechanics and geomechanical simulation have addressed a number of complex, production-related challenges. To maximize the productivity of mature fields, operators use HPC to analyze borehole stability in increasingly common and complex horizontal drilling situations, and to evaluate how subsidence caused by production affects the stability of surface facilities.

HPC is used to better understand gas and fluid injectivity, CO2 and complex fluid flooding in mature field settings, as well as CO2 sequestration and fault reactivation. More powerful and cost-effective computing is used to optimize production from reservoirs where properties change continuously during production, rather than being static, as assumed in conventional analysis. Prediction of reservoir compaction is particularly critical to improve the management of pressure and fluids in deepwater, high-porosity assets.

New algorithms that leverage Matrix Free solver technology pioneered by Anatech Corp. enable massive parallelization of highly complex geomechanical simulations. Problems in high-fidelity simulations that once had practical computing limits of 500,000 elements (which would encompass just a small corner of a large offshore field) can now be addressed, allowing engineers to accurately simulate an entire field in a matter of hours.
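As a rough illustration of the matrix-free idea (not Anatech's solver, whose internals the article does not describe), the sketch below solves a small linear system with the conjugate gradient method without ever assembling the stiffness matrix; the operator is applied on the fly instead. The 1D spring-chain operator, the function names, and the problem size are all assumptions chosen for illustration, and the method shown is plain linear CG rather than a nonlinear variant.

```python
def apply_stiffness(u):
    """Return K*u for a 1D chain of unit springs with both ends fixed.

    K is never stored: each entry of the product is computed directly
    from the neighbouring displacements (hypothetical toy operator).
    """
    n = len(u)
    out = [0.0] * n
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out[i] = 2.0 * u[i] - left - right
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def conjugate_gradient(apply_op, b, tol=1e-10, max_iter=1000):
    """Solve apply_op(x) = b for a symmetric positive-definite operator."""
    x = [0.0] * len(b)
    r = list(b)            # residual b - A*x for the initial guess x = 0
    p = list(r)            # first search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        ap = apply_op(p)   # the only place the operator is needed
        alpha = rs_old / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


load = [1.0] * 50  # uniform unit load on 50 free nodes
displacement = conjugate_gradient(apply_stiffness, load)
```

Because the solver only ever calls `apply_op`, memory grows with the number of unknowns rather than with the number of matrix entries, and the operator application can be split across nodes. That is the property that makes this style of solver attractive for large parallel runs.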

Today’s more robust parallel cluster technology is integrated with a new generation of modeling software. Together these technologies support faster, easier, more accurate modeling of subsurface mechanics. Clustered HPC running advanced modeling software can meet a number of field development challenges. E&P companies can apply geomechanical analysis to current geological models to gain returns from models that are already built and in use.

Example: Niger Delta

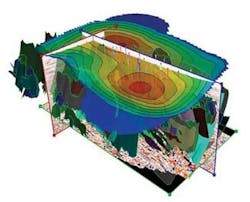

In one notable use of the cluster-based HPC approach in an offshore environment, Netherlands-based TNO worked with JOA Oil and Gas to create an integrated reservoir model of the complex, highly faulted Niger Delta region.

The stacked pay zones common to many Niger Delta fields challenge traditional software and computing solutions. The region’s complex tectonics feature multiple, narrow, conjugate faults, which often forced engineers to break up prospective modeling sequences into a series of intervals, or to discard some faults from the model entirely.

To understand these geomechanics, planners sought a state-of-the-art approach to modeling and simulation. They chose to combine well-established TNO DIANA technical analytics software with the JOA Jewel Suite modeling and simulation system, all running in a Microsoft Windows-based networked-cluster computing environment. A Matrix Free solver ran fast, accurate finite-element and finite-difference simulations on full-field geomechanical applications, allowing engineers to analyze complex and dynamic offshore assets in the Niger Delta.

Specifically, this cluster-based HPC approach evaluated the impact of a projected three- to five-foot subsidence on the geomechanics of this offshore field, on the configuration of drilling platforms, and on other production activities as the field was brought online over a period of years.

Putting affordable supercomputing at or near the desktop, this workflow-based solution gave Niger Delta production planners the ability to update models with new well or geologic information, and to translate complex geometries into conventional reservoir simulation packages. The solution also can model large depth intervals in a single run. If needed, engineers can integrate reservoir-scale compaction with modeling of subsidence at the surface to gain a more comprehensive understanding of the geomechanics.

In addition to the geomechanical modeling being done on the Niger Delta, this TNO DIANA solution also may be applied to other exploration and production applications, including carbon capture/storage, smart history matching, and field optimization.

Dynamic uncertainty management

Dispatching the right personnel and equipment at the right time typically is the most important variable affecting the cost, safety, and operational efficiency of any field development. E&P managers can apply cluster-based HPC and advanced software to manage uncertainty in a variety of situations.

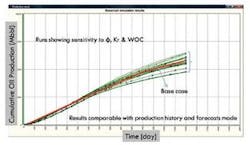

Dynamic Uncertainty Management (DUM) can quantify assets more precisely, giving producers a clearer picture of how much additional oil might be produced from an asset at a measurable financial return. In mature production environments, where water flood or gas lift is likely to be used for pressure support, and where traditional static modeling may not be adequate, dynamic reservoir behavior modeling can help maximize those assets.

A well-designed approach to DUM allows companies to better identify and analyze high-value data directly related to the dynamic behavior of a reservoir to avoid the time and cost of evaluating lower-value, less-relevant information. This also can provide a clearer understanding of initial reservoir conditions and historical reservoir performance, particularly in fields developed as long ago as the late 1950s.

Because a single, shared earth model links geologic modeling directly to dynamic simulation, current geological models (in which multiple modeling scenarios have been used to analyze lithologies and porosities for several years) can be evaluated using dynamic uncertainties such as permeability, fluid contacts, and water saturations. Cluster computing and smart interfaces make multiple runs possible and make dynamic simulation output easier to understand. Presenting that output in a graphical interface allows assets to be quantified more precisely in terms of reserve size and producibility.

Assets previously ignored or under-produced can be evaluated more closely for cost, production potential, and as candidates for gas lift or other production methods. Cluster-based HPC and case management interfaces can help study well placement and injection schemes for more informed production decisions.

As enhanced recovery becomes an element of early-stage production planning (while the dynamic behavior of the reservoir is poorly understood), identifying uncertainty is critical. Using Monte Carlo Simulation in a cluster-computing environment, engineers can examine a number of scenarios and combinations to identify the high-value data needed to minimize uncertainty in future production.
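A minimal sketch of the Monte Carlo idea follows. It assumes a toy volumetric formula in place of a full reservoir simulation, and every parameter range is hypothetical rather than taken from any real field. In a cluster setting each sample would typically be an independent simulation run dispatched to a separate node; here the samples are simply evaluated in a loop.

```python
import random


def recoverable_volume(area, thickness, porosity, water_saturation, recovery_factor):
    """Toy volumetric estimate in arbitrary units (stand-in for a simulation run)."""
    return area * thickness * porosity * (1.0 - water_saturation) * recovery_factor


def run_monte_carlo(n_samples, seed=0):
    """Draw uncertain inputs at random and return the sorted outcomes."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    outcomes = []
    for _ in range(n_samples):
        outcomes.append(recoverable_volume(
            area=rng.uniform(8.0, 12.0),               # hypothetical ranges
            thickness=rng.uniform(20.0, 40.0),
            porosity=rng.uniform(0.15, 0.30),
            water_saturation=rng.uniform(0.20, 0.45),
            recovery_factor=rng.uniform(0.25, 0.45),
        ))
    return sorted(outcomes)


def percentile(sorted_values, fraction):
    """Nearest-rank percentile of an ascending list."""
    index = min(len(sorted_values) - 1, int(fraction * len(sorted_values)))
    return sorted_values[index]


outcomes = run_monte_carlo(10_000)
low, mid, high = (percentile(outcomes, f) for f in (0.10, 0.50, 0.90))
print(f"10th / 50th / 90th percentile: {low:.1f} / {mid:.1f} / {high:.1f}")
```

The spread between the low and high percentiles gives a simple measure of how uncertain the outcome is. Repeating the run with one input held fixed shows how much of that spread the input is responsible for, which is one way to rank which data would be most valuable to acquire.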

Because older reservoirs may not have been well measured or documented, the ability to quickly analyze multiple scenarios helps engineers better model initial reservoir conditions, and thus make more accurate predictions. As a result, history matching exercises can be completed in a fraction of the time currently required. By moving from a traditional linear to a more efficient non-linear computing approach, engineers can save time and money while achieving more accurate results.
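The scenario-screening step of history matching can be sketched as follows. This is an illustration under stated assumptions, not the workflow of any product named in the article: the forward model is a simple exponential decline curve, the "observed" history is synthetic (generated from known parameters), and the candidate grid is hypothetical. Each candidate is scored independently, so the evaluations could be spread across cluster nodes.

```python
import math


def simulate_rates(initial_rate, decline, times):
    """Toy forward model: exponential decline q(t) = q_i * exp(-D * t)."""
    return [initial_rate * math.exp(-decline * t) for t in times]


def misfit(candidate, times, observed):
    """Sum of squared differences between simulated and observed rates."""
    simulated = simulate_rates(candidate[0], candidate[1], times)
    return sum((s - o) ** 2 for s, o in zip(simulated, observed))


times = list(range(10))  # years on production
# Synthetic "history", generated from known parameters purely for illustration.
observed = simulate_rates(1000.0, 0.20, times)

# Candidate scenarios: every combination of initial rate and decline constant.
candidates = [(q, d / 100.0) for q in range(800, 1201, 50) for d in range(10, 31, 2)]

# Keep the scenario whose simulated production best reproduces the history.
best = min(candidates, key=lambda c: misfit(c, times, observed))
print("best-matching scenario:", best)
```

A real study would replace the decline curve with a reservoir simulator and the grid with a smarter search, but the structure is the same: many independent forward runs, each compared against recorded production.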

How HPC works

To achieve their most fundamental business objectives – making fast, reliable decisions that produce lower risk and higher returns – oil and gas companies must sift through growing mountains of data. To do that, powerful, affordable supercomputing must be available to end users, particularly when doing seismic analysis, reservoir simulation, or uncertainty management.

The good news is that geoscientists, engineers, risk managers, and other end users can now harness this proven cluster-oriented, server-based HPC environment to distribute work across multiple connected servers linking local workstations, departments, and even companies.

Windows HPC Server 2008 puts supercomputing performance and scalability at the fingertips of end users. Because they “time share” a cluster of servers, users can do more work in less time with greater accuracy, using applications that cannot be managed on a single technical workstation. Because wizard-style tools are used for remote node installation, updates, and operational management, creating and using a modern HPC cluster is simple.

This integrated approach also allows users to access HPC clusters via direct network access from a desktop, virtual private network (VPN), or web-based portal, giving technical personnel anywhere/anytime access to these resources.

Today’s cluster-based HPC solutions work in conjunction with modern graphical user interfaces (GUIs) that are familiar to most computer users, as well as with popular desktop applications such as Microsoft Outlook and Excel. Cluster-based HPC also is suited to run tried and tested reservoir simulation applications such as Sensor and Computer Modeling Group’s suite of reservoir applications, as well as geomechanical applications such as TNO DIANA.

From a broader IT perspective, the Microsoft approach to cluster computing allows companies to leverage existing Windows infrastructure, including security, Active Directory, and enterprise management tools. Those common features allow users to leverage their existing skills, and help drive greater productivity and cost reductions.

This approach is also cost-effective when compared to building a third-party Linux solution, because Microsoft provides a job scheduler, performance monitor, and other cluster-oriented administrative and deployment tools for a single solution price. Companies can leverage this capability to eliminate isolated HPC islands and to parallelize many existing Windows-based compute-intensive applications.