Computing power drives exploration advances

Gene Kliewer, Technology Editor, Subsea & Seismic

The increase in dataset size that comes with multi-azimuth seismic acquisition slows computer processing of that data for interpretation. Also, the growing application of real-time data monitoring of upstream drilling and production activities demands more computing power than in the past. Computer systems are being developed to address these problems and to shorten the time between data acquisition and interpretation.

Parallel processing improvements and the integration of graphics processing units (GPUs) with central processing units (CPUs) have pushed the capacity of desktop workstations to the level of supercomputers.

On the hardware front, NVIDIA has developed a GPU-based “personal supercomputer,” Tesla, with processing capacity equivalent to that of a traditional CPU computing cluster. Tesla systems are priced like conventional PC workstations but deliver 250 times the processing power, says NVIDIA.

As a reference, OpenGeoSolutions applied a workstation with an NVIDIA Tesla C1060 GPU computing processor, based on the CUDA parallel computing architecture, to one of its interpretations. The results of the spectral decomposition technique improve when large regional datasets are used. The data is also inverted to translate it into geologic structures. Both steps require powerful processing capacity to complete in a reasonable time.

“We are measuring speedups from two hours to two minutes using CUDA and the Tesla C1060,” says James Allison, president of OpenGeoSolutions. “This kind of performance increase is totally unprecedented and in a market where there is great economic value in being able to determine these fine subsurface details, this is a game changer.

“More importantly, the Tesla products essentially give us all a personal supercomputer. Just one Tesla C1060 delivers the same performance as our 64 CPU cluster, and this was a resource we had to share.”
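The quoted runtimes can be turned into a speedup factor with simple arithmetic. The sketch below assumes "two hours to two minutes" refers to the same job timed before and after the move to the GPU; the function name is hypothetical.

```python
def speedup(before_minutes, after_minutes):
    """Ratio of the old runtime to the new runtime."""
    return before_minutes / after_minutes

# Two hours (120 minutes) reduced to two minutes.
factor = speedup(2 * 60, 2)
print(factor)  # 60.0
```

On these figures the job runs about 60 times faster.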

“We have all heard ‘desktop supercomputer’ claims in the past, but this time it is for real,” says Burton Smith, Microsoft technical fellow. “Heterogeneous computing, where GPUs work in tandem with CPUs, is what makes such a breakthrough possible.”

Illustrating its position in desktop supercomputing, Microsoft Corp. moved into the Top 10 of the world’s most powerful computers in the recent Supercomputing 2008 rankings. Microsoft ranked No. 10 with the Shanghai Supercomputer Center and Dawning Information Industry Co. Ltd. facility. That installation recorded a parallel computing speed of 180.6 teraflops at 77.5% efficiency. It uses Microsoft’s Windows HPC Server 2008.
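The two figures can be related with a short calculation. The sketch below assumes that "efficiency" means measured performance divided by theoretical peak performance, the usual convention in supercomputer rankings; the text does not define the term, and the function name is hypothetical.

```python
def implied_peak_teraflops(measured_teraflops, efficiency):
    """Theoretical peak implied by a measured speed and an efficiency ratio.

    Assumes efficiency = measured / peak (an assumption, not stated in the text).
    """
    return measured_teraflops / efficiency

peak = implied_peak_teraflops(180.6, 0.775)
print(round(peak, 1))  # 233.0
```

Under that assumption, the installation's theoretical peak would be roughly 233 teraflops.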

The advantages of this processing power and speed include the opportunity to maximize IT resources, says Microsoft. Windows HPC Server 2008 can integrate with existing infrastructure and has management tools that make deploying high-performance computing relatively simple.

Windows HPC Server 2008 allows oil and gas firms to port and write new applications for clusters to keep up with evolving industry needs.

Many critical applications, including reservoir simulation and fluid dynamics, are bound by the limitations of the workstation. Windows HPC Server 2008 can scale from workstation to clusters as processing demand grows.

Repsol YPF has taken another direction to address its high-power computing requirements with the introduction of its Kaleidoscope Project. The key to Kaleidoscope’s value is reverse-time migration (RTM). The drawback to RTM has been its immense dataset. Repsol’s solution is to build a supercomputer from off-the-shelf hardware. Using IBM PowerXCell 8i processors in a scalable Linux cluster, Repsol has reached 120 teraflops. This is equivalent to 10,000 Pentium 4 processors, the company says.

The RTM algorithm used in Kaleidoscope is the result of collaboration among Repsol, the Barcelona Supercomputing Center, and FusionGeo Inc. FusionGeo is a new company formed by the recent merger of Fusion Geophysical LLC and 3DGeo. The initial RTM work came out of Stanford University’s Stanford Exploration Project, an industry-funded consortium organized to improve the theory and practice of constructing 3D and 4D images from seismic sampling.

“Our work with Repsol on Kaleidoscope is only the start of what we envision to be a broad range of totally new integrated imaging and reservoir technologies and innovation that will be created in Kaleidoscope,” says Dr. Alan R. Huffman, CEO of FusionGeo. “These technologies will include new analysis tools for geopressure prediction and fracture detection with 3D seismic, advanced applications of land statics and waveform inversion, and full scale integration of these technologies at the reservoir scale to enhance the characterization of the reservoir during exploration and improve the performance of the reservoir during production.”

The Kaleidoscope supercomputer began operating in October 2008. With a 22-sq ft (2-sq m) footprint, the base cluster is 300 IBM blades distributed into eight racks. The organization within the racks was designed to optimize the RTM algorithm. Using a six-blade computational unit, Repsol says 50 data blocks can be processed simultaneously across 50 computational units.

Parallel processing contributes to Kaleidoscope at four levels:

- Grid level: All the shots in a migration algorithm can be processed in parallel, so the different computational nodes of a cluster can run different shots simultaneously. This is called grid-level parallelism, and Kaleidoscope uses the Grid-SuperScalar program for it. The parallel efficiency is 100%.

- Process level: Each shot requires some hardware resources. If one computational node of the cluster cannot handle a shot, its execution must be split among nodes using domain decomposition techniques. The scalability of this approach is limited by the use of finite differences. Moreover, at this level, users must manage the input/output (I/O) needed by RTM. Using asynchronous I/O and checkpointing minimizes the I/O time in an RTM execution.

- Thread level: In today’s supercomputers, a single computational node is usually a shared-memory multiprocessor. To use all the processors in a computational node efficiently, Kaleidoscope uses OpenMP programming. This allows use of all the memory in a single node for a single shot or for a domain from a single shot.

- Processor level: Because migration algorithms are usually limited by memory bandwidth, it is critical to minimize the cache miss ratio of the computational kernel. This is accomplished with blocking algorithms.
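Two of these levels, independent shots and a blocked kernel, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Repsol's code: a 5-point finite-difference Laplacian stands in for the RTM kernel, a thread pool stands in for the nodes of a cluster, and `make_shot`, `laplacian_naive`, `laplacian_blocked`, and `process_shots` are hypothetical names. Python will not show the cache benefit itself; the point is the loop structure that a compiled kernel would use.

```python
from concurrent.futures import ThreadPoolExecutor


def stencil(grid, i, j):
    """5-point finite-difference Laplacian at interior point (i, j)."""
    return (grid[i - 1][j] + grid[i + 1][j]
            + grid[i][j - 1] + grid[i][j + 1]
            - 4.0 * grid[i][j])


def laplacian_naive(grid):
    """Sweep the whole interior row by row (reference version)."""
    ny, nx = len(grid), len(grid[0])
    out = [[0.0] * nx for _ in range(ny)]
    for i in range(1, ny - 1):
        for j in range(1, nx - 1):
            out[i][j] = stencil(grid, i, j)
    return out


def laplacian_blocked(grid, block=4):
    """Same stencil, visiting the interior one block x block tile at a time.

    In a compiled kernel, a tile small enough to stay in cache is what
    reduces the cache miss ratio.
    """
    ny, nx = len(grid), len(grid[0])
    out = [[0.0] * nx for _ in range(ny)]
    for i0 in range(1, ny - 1, block):          # tile origin, rows
        for j0 in range(1, nx - 1, block):      # tile origin, columns
            for i in range(i0, min(i0 + block, ny - 1)):
                for j in range(j0, min(j0 + block, nx - 1)):
                    out[i][j] = stencil(grid, i, j)
    return out


def make_shot(seed, ny=10, nx=12):
    """Hypothetical stand-in for one shot's data: deterministic values."""
    return [[float((seed * 31 + i * 7 + j * 3) % 11) for j in range(nx)]
            for i in range(ny)]


def process_shots(shots, workers=4):
    """Shots do not depend on each other, so each goes to its own worker."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(laplacian_blocked, shots))


shots = [make_shot(s) for s in range(8)]
results = process_shots(shots)

# The blocked kernel must reproduce the reference result for every shot.
matches = all(r == laplacian_naive(s) for r, s in zip(results, shots))
print(matches)  # True
```

Blocking changes only the order in which grid points are visited, which is why the blocked and reference results agree exactly. Because no shot reads another shot's data, adding workers requires no coordination between them.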

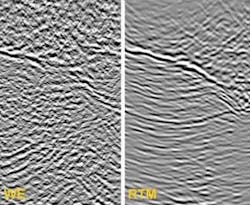

The new-found capacity to process data faster and at lower cost is leading to other exploration advances. For example, Paradigm has introduced its EarthStudy 360 product, which provides angle-domain seismic imaging by decomposing the seismic wavefield at subsurface image points into angle domains, giving a new perspective on the subsurface. EarthStudy 360 can generate continuous-azimuth, angle-dependent subsurface images (full-azimuth angle gathers) using the full recorded wavefield. It generates and extracts high-resolution data related to subsurface angle-dependent reflectivity, with simultaneous emphasis on continuous and discontinuous subsurface features such as faults and small-scale fractures. Because angle gathers carry both full-azimuth reflection (amplitude) and directional (dip and azimuth) information, geoscientists are able to generate and work with a new set of deliverables for uncovering physical and geometric properties of the subsurface.

“Full wavefield and full azimuth is not simply seismic interpretation decomposition imaging,” says Duane Dopkin, senior VP of Technology for Paradigm. “It gives new ways to interact with data and new visualization perspectives, and makes use of all the data.”

New partnerships are coming out of this drive for more computing power.

SMT, for example, has become an Independent Software Vendor (ISV) partner with Hewlett-Packard (Canada) Co. Through this partnership, HP Canada will supply PC-based hardware, workstations, high-end graphics cards, and high-end mobile workstations to SMT customers to maximize the performance of SMT’s seismic interpretation software.

“With the increasing demand for exploration of oil and gas and the complexities of new oil fields, the need to maximize geoscience technologies is critical,” says Brian Kulbaba, country manager at SMT Canada. “By offering KINGDOM on one of the top hardware platforms today, we are enabling geoscientists to handle even larger amounts of seismic data and increasing their productivity.

“When users are loading six-figure well data, a common practice in our industry, a fast machine is essential to the interpretation and decision-making process. KINGDOM on HP hardware will significantly increase productivity on the desktop, while reducing the cycle times and lowering exploration and operating costs.”

SMT KINGDOM operates on all 64-bit and 32-bit Windows-based platforms available through HP Canada. Customers who wish to migrate to a 64-bit environment can also run 32- and 64-bit applications simultaneously.